Windows Desktop VR

This is a write-up of how, a few years back, I got my hands on an Oculus DK2 and, after a dozen frantic nights of coding, came up with this:

Disclaimer

At the time of recording this video I no longer had access to a DK2 headset, so the footage was captured running purely in desktop mode, with simulated camera movement that follows the cursor.

So what are we looking at?

There are numerous other virtual desktop applications available right now, and they definitely do work, but the vast majority of them just stream the contents of your monitor into VR. And that’s quite limiting.

A 360° field-of-view VR desktop, on the other hand, is essentially an endless screen where you can arrange your applications all around you and at any distance, and interact with them just like you would with a normal desktop: through mouse and keyboard.

The VR cursor is independent of the system one and moves freely on a sphere at a fixed distance from the camera. The user is stationary but can look in all directions (a swivel chair with a keyboard tray would work perfectly here).

In VR, you can grab and move any window around, bring it forward and backward in fixed increments, and angle it horizontally. Windows can also be scaled up or down, but I haven’t implemented resizing yet: by carefully grabbing the very edge it’s technically possible, but the virtual window won’t adjust its size accordingly. Finally, any single window can be quickly focused, which temporarily brings it closer for easier viewing.

And you can definitely go overboard with it:

How it works

So to start off, this is a regular Windows program that uses DirectX and the Oculus DK2 SDK. Upon launch I get a list of all running applications and try to obtain their window handles; if they meet certain criteria, I create a 3D mesh for each one and add it to the scene, trying to avoid overlaps. Popups and child windows are positioned relative to their parents, slightly in front of them (this allows things like tooltips and context menus to work correctly), which means I have to constantly monitor for newly added windows.
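One simple way to "add to the scene trying to avoid overlaps" is greedy packing on a ring around the viewer. The sketch below is an illustration, not the original code: it assumes each window gets an angular span proportional to its pixel width, and `WindowSlot`, `placeOnRing`, `pixelsPerRadian` and `gapRadians` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical record for one captured top-level window.
struct WindowSlot {
    int widthPx;            // window width in desktop pixels
    double centerYaw = 0.0; // assigned yaw (radians) of the window's centre
};

// Greedy placement: walk around the ring and give each window the next free
// angular span, leaving a small gap so neighbouring quads never overlap.
// Returns false as soon as a window no longer fits on this ring; windows
// placed before that keep their assignment, and the caller could push the
// rest onto a farther ring.
bool placeOnRing(std::vector<WindowSlot>& windows,
                 double pixelsPerRadian, double gapRadians) {
    const double twoPi = 2.0 * std::acos(-1.0);
    double cursor = 0.0; // start angle of the next free span
    for (auto& w : windows) {
        assert(w.widthPx > 0);
        double span = w.widthPx / pixelsPerRadian;
        if (cursor + span > twoPi) return false;
        w.centerYaw = cursor + span / 2.0;
        cursor += span + gapRadians;
    }
    return true;
}
```

With 1000 px per radian and a 0.1 rad gap, a 1000 px window is centred at 0.5 rad and a following 500 px window at 1.35 rad.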

A window mesh is just a curved quad with a radius determined by how far away it sits from the camera. No magic there.
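Here is a sketch of such a curved quad, assuming it is a slice of a vertical cylinder centred on the camera, with the window's width treated as arc length (so its angular span is width / radius). The right-handed, camera-looks-down-minus-Z convention and the names `Vertex` and `buildCurvedQuad` are assumptions for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vertex { float x, y, z, u, v; };

// Builds a curved quad as a strip of a vertical cylinder centred on the camera.
// radius: distance from the camera (also the cylinder radius)
// width:  arc length of the window in world units; height: its height
// yaw:    horizontal angle of the window centre; 0 looks down -Z
// Vertices are emitted column by column (top, bottom) for a triangle strip;
// u runs 0..1 left to right, v runs 0..1 top to bottom.
std::vector<Vertex> buildCurvedQuad(float radius, float width, float height,
                                    float yaw, int segments) {
    assert(radius > 0 && segments > 0);
    const float arc = width / radius; // angular span in radians
    std::vector<Vertex> verts;
    verts.reserve(2 * (segments + 1));
    for (int i = 0; i <= segments; ++i) {
        float u = static_cast<float>(i) / segments;
        float angle = yaw - arc / 2 + u * arc;
        float x = radius * std::sin(angle);
        float z = -radius * std::cos(angle);
        verts.push_back({x, height / 2, z, u, 0.0f});
        verts.push_back({x, -height / 2, z, u, 1.0f});
    }
    return verts;
}
```

Every vertex lies exactly `radius` away from the viewing axis, so text stays at a constant distance from the eye across the whole window; moving a window forward or backward just means rebuilding it with a new radius.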

But how to display the contents of the application on my quad?

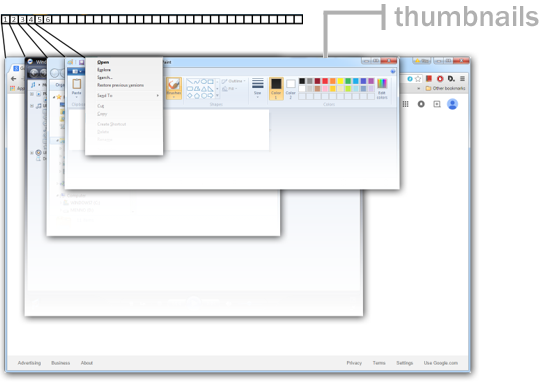

Attempt 1 | Thumbnails API

The Windows SDK comes with the DwmRegisterThumbnail API, which allows us to display an arbitrary window somewhere in our application. If we could do that and then fetch the pixel data, we could stream it to the GPU.

Turns out it’s not that simple. While the API sounds like it would paint another window somewhere onto ours, what it really does is issue a request to the operating system to overlay that window’s thumbnail at a given position later, at the compositing stage. This means there is no way to access the actual contents of the thumbnail, because it’s never blitted onto our program’s drawing context: it all comes out blank if you try to query it.

Ok, so that won’t work. But surely this will:

Attempt 2 | Print Screen

Yes! Print Screen will definitely capture the pixels of the entire screen and let us retrieve them from the clipboard no matter what program is running. But it captures the entire desktop, with all windows overlapping one another and some potentially extending beyond the screen or minimized, whereas we need the contents of each program individually.

We could minimize all windows, bring one up, position it carefully and take a screenshot, then minimize it and do the same with the next, and the next, but that would be extremely slow and would probably interrupt the VR program due to the constant switching of focus and activation. And we would like each window to be updated live, to keep the VR experience as close to the real thing as possible.

Blitting using GDI suffers from the same constraints and doesn’t perform much better either.

Attempt 3 | WM_PRINT?

Yeah, no. Most applications these days will ignore a WM_PAINT or WM_PRINT / WM_PRINTCLIENT request and even if they support it, if a window is obscured or partially extends beyond the edges of the screen, that part will most likely come out black.

But there is one thing that performs exactly what we need.

Attempt 4 | Enter Aero

Or, to be exact, the Desktop Window Manager (DWM): the hardware-accelerated framework that powers the Aero skin in Windows and enables all the smooth animations, as well as this: Aero Flip.

One time I was flipping through applications using Flip 3D and noticed that the VLC player kept playing the movie perfectly fine, even during the 3D transition after the window was selected.

So this couldn’t have been the Thumbnails API at work, because that one is only capable of embedding 2D images. And the 3D animations strongly suggested that the contents of each window are sent to the GPU in real time and ultimately displayed as textured 3D meshes.

If only I could get my hands on those textures…

Those textures are created by the DWM process.

Turns out I wasn’t the first one trying to pry it open and steal the goods. Steve Morin published the source code of his dwmaxx utility, which hooks into DWM and injects overrides for D3D calls; it was extremely helpful.

What dwmaxx does is find, inside the in-memory image of dwmcore.dll, all functions imported from d3d10.dll and override them with custom methods. For exactly how this works you had better consult the expert directly, but the technique is called Import Address Table (IAT) hooking, and it’s akin to vtable patching. Through PIMAGE_THUNK_DATA you can inspect the functions a module imports from DLLs and substitute your own pointers, creating a proxy layer if you wish. (There’s a recent article I found that describes the process very well; check it out.)
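Real IAT patching walks the `IMAGE_IMPORT_DESCRIPTOR` and `IMAGE_THUNK_DATA` entries of a loaded PE image and typically needs `VirtualProtect` to make the table writable, all of which is Windows-only. The portable sketch below models just the core idea: the importing module calls through a table of function pointers, so swapping one entry for a proxy lets us observe every call before forwarding it. `ImportTable`, `realDraw`, `hookedDraw` and `installHook` are hypothetical stand-ins, not dwmaxx code.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Stand-in for one imported function: a Draw(vertexCount, startVertex) call.
using DrawFn = void (*)(unsigned vertexCount, unsigned startVertex);

// Stand-in for an Import Address Table: the importing module never calls the
// DLL directly, only through these slots, so whoever owns a slot owns the call.
struct ImportTable {
    DrawFn draw;
};

std::vector<std::string> g_log;  // records which functions were invoked
DrawFn g_originalDraw = nullptr; // saved pointer used for forwarding

void realDraw(unsigned vertexCount, unsigned startVertex) {
    g_log.push_back("real:" + std::to_string(vertexCount) + "@" +
                    std::to_string(startVertex));
}

// Proxy: inspect the call, then forward it so rendering is unaffected.
void hookedDraw(unsigned vertexCount, unsigned startVertex) {
    g_log.push_back("hook:" + std::to_string(vertexCount));
    g_originalDraw(vertexCount, startVertex);
}

// Swap the slot and remember the original, which is what IAT hooking does to
// the thunk's function pointer once the page has been made writable.
void installHook(ImportTable& table) {
    assert(table.draw != nullptr);
    g_originalDraw = table.draw;
    table.draw = hookedDraw;
}
```

After `installHook`, every `table.draw(...)` call first passes through `hookedDraw` and still reaches `realDraw`, which is exactly the "proxy layer" described above.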

We know that DWM uses Direct3D to draw all the windows, so it must issue a call to Draw at some point, passing the vertex information for the mesh. So if we replace it with our own version of Draw, inside it we can fetch the currently bound texture handle and expose it to the outside:
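A portable model of such a hooked Draw follows. In real D3D10 code the proxy would presumably query the bound shader resource view (for example via `ID3D10Device::PSGetShaderResources`) and obtain a shareable handle through `IDXGIResource::GetSharedHandle`. Here `FakeDevice`, `DrawRecord` and `TextureHandle` are hypothetical stand-ins, and the vertex positions are assumed to already be in screen pixels (actual DWM vertices may need transforming first).

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

using TextureHandle = std::uintptr_t; // stand-in for a shared HANDLE

struct ScreenVertex { float x, y; };  // assumed to be in screen pixels
struct Rect { float left, top, right, bottom; };

// Minimal stand-in for the device state the proxy can read at draw time.
struct FakeDevice {
    TextureHandle boundTexture = 0;          // what the bound SRV would resolve to
    std::vector<ScreenVertex> boundVertices; // contents of the bound vertex buffer
};

// What the hook exposes to the outside world for every draw call.
struct DrawRecord {
    TextureHandle texture;
    Rect bounds; // screen-space bounding box of the drawn geometry
};

std::vector<DrawRecord> g_drawRecords;

// Proxy body: capture the bound texture and the extent of the vertices used by
// this call. A real hook would then forward to the original Draw.
void hookedDraw(const FakeDevice& dev, unsigned vertexCount, unsigned startVertex) {
    assert(startVertex + vertexCount <= dev.boundVertices.size());
    if (vertexCount == 0) return;
    const ScreenVertex& first = dev.boundVertices[startVertex];
    Rect r{first.x, first.y, first.x, first.y};
    for (unsigned i = startVertex; i < startVertex + vertexCount; ++i) {
        const ScreenVertex& v = dev.boundVertices[i];
        r.left = std::min(r.left, v.x);
        r.right = std::max(r.right, v.x);
        r.top = std::min(r.top, v.y);
        r.bottom = std::max(r.bottom, v.y);
    }
    g_drawRecords.push_back({dev.boundTexture, r});
}
```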

Note that the handle must be of a shared type; otherwise other processes won’t have access to the resource. To ensure window textures are created as shared resources, we must also override CreateTexture2D like so:
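Conceptually, the override only has to adjust the texture description before forwarding it. The sketch mirrors the `MiscFlags` field of `D3D10_TEXTURE2D_DESC` and the value of `D3D10_RESOURCE_MISC_SHARED` (0x2 in the D3D10 headers) in local definitions, so it compiles without the Windows SDK. `TextureDesc`, `CreateFn` and `createTexture2DProxy` are hypothetical names, and any other constraints the runtime places on shared resources are ignored.

```cpp
#include <cassert>
#include <cstdint>

// Mirrors the value of D3D10_RESOURCE_MISC_SHARED from the D3D10 headers.
constexpr std::uint32_t kResourceMiscShared = 0x2;

// Local stand-in for the fields of D3D10_TEXTURE2D_DESC this sketch touches.
struct TextureDesc {
    std::uint32_t width = 0;
    std::uint32_t height = 0;
    std::uint32_t miscFlags = 0;
};

// Signature of the original creation routine we forward to (hypothetical;
// the int return value stands in for an HRESULT).
using CreateFn = int (*)(const TextureDesc& desc);

// Proxy: copy the caller's description, force the shared flag so another
// process can later open the texture by handle, then call the original.
int createTexture2DProxy(const TextureDesc& callerDesc, CreateFn original) {
    assert(original != nullptr);
    TextureDesc patched = callerDesc;
    patched.miscFlags |= kResourceMiscShared;
    return original(patched);
}
```

Copying the description rather than editing the caller's struct keeps the caller's data untouched, so only the texture actually created differs.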

Cool: this way we can see which textures DWM creates and what handles they have, so we can now pass them to our Oculus VR app and use them for our 3D windows.

But wait, how do we know which texture corresponds to which program? All we see are pointer values and vertex buffers, with no mention of a program name or window handle.

The dwmaxx utility has a watermarking feature that encodes the window’s hWnd into the texture image itself as a special pixel, but sadly I could not get it to work reliably. So I had to find another way.

So let’s have another look at the Draw call. Surely that’s where the magic happens when Windows is about to draw each application on the screen. A window is just a quad and inside that method we have access to the vertex data so... how about we just check the coordinates of the vertices and compare them to the window coordinates obtained via GetWindowRect?
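The matching step could look like the sketch below, where `WindowInfo` pairs a stand-in window id with the rectangle `GetWindowRect` reported (hypothetical names and portable types, with a one-pixel tolerance chosen arbitrarily). Returning every match rather than the first makes the weakness visible: windows with identical rectangles cannot be told apart.

```cpp
#include <cassert>
#include <cstdlib>
#include <vector>

struct Rect { int left, top, right, bottom; };

struct WindowInfo {
    int id;    // stand-in for an HWND
    Rect rect; // what GetWindowRect reported for it
};

// True if two rectangles agree within a tolerance, allowing a pixel of slack
// for rounding in the drawn geometry.
bool sameRect(const Rect& a, const Rect& b, int tolerance = 1) {
    return std::abs(a.left - b.left) <= tolerance &&
           std::abs(a.top - b.top) <= tolerance &&
           std::abs(a.right - b.right) <= tolerance &&
           std::abs(a.bottom - b.bottom) <= tolerance;
}

// All windows whose on-screen rectangle matches the quad DWM just drew.
std::vector<int> matchWindows(const Rect& drawnQuad,
                              const std::vector<WindowInfo>& windows) {
    std::vector<int> ids;
    for (const auto& w : windows)
        if (sameRect(drawnQuad, w.rect)) ids.push_back(w.id);
    return ids;
}
```

A regular window yields a single match, but two maximized or fullscreen windows on a 1920 × 1200 screen both match the same quad.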

Brilliant!

Except, what’s that? A fullscreen window? Don’t they all have the same position and size? Ugh!

Thumbnails API to the rescue!

So here’s where the initially useless DWM thumbnail API gets a chance to redeem itself. Because of how this API works, it effectively tells DWM to add extra geometry, textured with the same texture as the main window, at coordinates and a size of our choosing. This is also why we couldn’t get any pixel data from it: nothing was being painted into our window.

So this means it must be drawing those thumbnails the same way it draws the real windows — and that is through Direct3D!

And it turns out that’s exactly what’s happening — for each thumbnail we request to be displayed, a call to Draw will happen with the vertex data for that thumbnail.

Hmm. So what if we did this:

What if we created a window in which we request a thumbnail for each application we are interested in, each 1×1 pixel in size and placed at a unique position on the screen, let’s say along the very bottom row (y = 1199)? Because we decide which thumbnail goes where, inside the Draw function we could later look up which application a given unique coordinate belongs to.

For example, Paint.exe will be drawn at (1, 1199), Notepad.exe at (2, 1199), Chrome.exe at (3, 1199) and so on.
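The slot assignment is simple bookkeeping. A sketch, assuming a 1200-pixel-tall screen (so the bottom row is y = 1199) and keying on executable names for readability; a real version would more likely key on window handles and stop at the screen width. `ThumbnailPalette`, `slotFor` and `appAt` are hypothetical names.

```cpp
#include <cassert>
#include <map>
#include <optional>
#include <string>
#include <utility>

// Bookkeeping for the "thumbnail palette": each tracked application gets a
// unique 1x1 pixel slot on the bottom row of the screen.
class ThumbnailPalette {
public:
    explicit ThumbnailPalette(int screenHeight) : row_(screenHeight - 1) {
        assert(screenHeight > 0);
    }

    // Returns the (x, y) destination for this app's 1x1 thumbnail, assigning
    // the next free column on first use. Columns start at 1 as in the text.
    std::pair<int, int> slotFor(const std::string& app) {
        auto it = columnByApp_.find(app);
        if (it == columnByApp_.end()) {
            int column = nextColumn_++;
            it = columnByApp_.emplace(app, column).first;
            appByColumn_[column] = app;
        }
        return {it->second, row_};
    }

    // Reverse lookup for when a 1x1 draw shows up at (x, y).
    std::optional<std::string> appAt(int x, int y) const {
        if (y != row_) return std::nullopt;
        auto it = appByColumn_.find(x);
        if (it == appByColumn_.end()) return std::nullopt;
        return it->second;
    }

private:
    int row_;
    int nextColumn_ = 1;
    std::map<std::string, int> columnByApp_;
    std::map<int, std::string> appByColumn_;
};
```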

Then, in the hijacked DWM process, we store every texture handle in a map whenever we detect that it is drawn 1 pixel big on row 1199. To retrieve a handle from the Oculus application, I send the position of the thumbnail whose texture handle I want through an RPC using PostMessage to a special window that dwmaxx spawns when it attaches to DWM; its custom WndProc receives the request, looks up the handle in the map at that position and sends it back as an LRESULT. (Strictly speaking, only a synchronous SendMessage hands the WndProc’s LRESULT back to the caller; PostMessage returns immediately without waiting for one.)
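On the DWM side, the hook only has to recognise palette draws and remember their textures. A sketch under the same assumptions (palette on row 1199, rectangles in screen pixels with exclusive right/bottom edges), with `PaletteTextureMap`, `onDraw` and `handleRequest` as hypothetical names. `handleRequest` plays the role of the custom WndProc: it takes the slot's x coordinate and returns the handle as a signed pointer-sized value, the way an LRESULT would carry it, with 0 meaning "not found".

```cpp
#include <cassert>
#include <cstdint>
#include <unordered_map>

using TextureHandle = std::uintptr_t; // stand-in for a shared HANDLE

class PaletteTextureMap {
public:
    explicit PaletteTextureMap(int paletteRow) : row_(paletteRow) {
        assert(paletteRow >= 0);
    }

    // Called from the hooked Draw with the drawn quad's screen-space bounds
    // (right/bottom exclusive). Only 1x1 quads on the palette row are palette
    // thumbnails; everything else is an ordinary window and is ignored.
    void onDraw(TextureHandle texture, int left, int top, int right, int bottom) {
        bool onePixel = (right - left == 1) && (bottom - top == 1);
        if (onePixel && top == row_) byColumn_[left] = texture;
    }

    // Body of the request handler: x of the requested slot in, handle out.
    std::intptr_t handleRequest(int x) const {
        auto it = byColumn_.find(x);
        return it == byColumn_.end() ? 0 : static_cast<std::intptr_t>(it->second);
    }

private:
    int row_;
    std::unordered_map<int, TextureHandle> byColumn_;
};
```

Keying the map by column alone is enough here because every palette thumbnail shares the same row.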

NOTE: The Thumbnail API will only issue a draw call if the thumbnail is within the screen boundaries, has a non-zero size and the window is not hidden. So we can’t, for example, place this “thumbnails palette” window outside the screen or minimize it; it must be visible. But we can reduce its opacity to 1%, which effectively hides it.

And now we can display live updated windows inside our custom VR app.

Ok, and how does the input work?

Making interactions with the virtual windows work the exact same way as natively was a bit of a challenge on its own.

At first I tried simulating mouse input via the SendInput Win32 API, but while this lets you position the cursor and even issue a button press, the window you’re interacting with must be on top and within the boundaries of the screen, and it doesn’t trigger hover events properly.

Another method I used was to send WM_* messages directly to the window handles:

```cpp
// wParam: MK_LBUTTON (0x0001) marks the left button as pressed
// lParam: client-area coordinates packed with MAKELPARAM(x, y)
SendMessage(targetWindow, WM_LBUTTONDOWN, MK_LBUTTON, mousePos);
```

But this only worked for some applications, and it often behaved unpredictably when the window was minimized or extended beyond the screen. It also only covered clicks, whereas I also wanted to simulate hover events; while WM_MOUSEHOVER exists, most programs I tried ignored it completely. WM_HOVER, WM_MOUSEENTER etc. worked for some windows and not for others, so it became a guessing game of finding the right combination of messages that would cover most cases, if such a combination existed at all.
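For reference, mouse messages such as WM_LBUTTONDOWN carry the client-area cursor position in `lParam`: x in the low-order word and y in the high-order word, each read back as a signed 16-bit value (which is why the documentation recommends `GET_X_LPARAM`/`GET_Y_LPARAM` over `LOWORD`/`HIWORD`). The sketch re-implements that packing portably so it runs without `<windows.h>`; `packMousePos`, `unpackX` and `unpackY` are hypothetical names standing in for `MAKELPARAM` and the `GET_*_LPARAM` macros.

```cpp
#include <cassert>
#include <cstdint>

// Packs client coordinates the way MAKELPARAM(x, y) does: x in the low 16
// bits, y in the next 16 bits, zero-extended to the pointer-sized LPARAM.
std::int64_t packMousePos(int x, int y) {
    assert(x >= -32768 && x <= 32767 && y >= -32768 && y <= 32767);
    auto lo = static_cast<std::uint16_t>(x);
    auto hi = static_cast<std::uint16_t>(y);
    return static_cast<std::int64_t>((static_cast<std::uint32_t>(hi) << 16) | lo);
}

// Decodes like GET_X_LPARAM / GET_Y_LPARAM: each 16-bit half is
// sign-extended, so negative coordinates survive the round trip.
int unpackX(std::int64_t lParam) {
    return static_cast<std::int16_t>(lParam & 0xFFFF);
}

int unpackY(std::int64_t lParam) {
    return static_cast<std::int16_t>((lParam >> 16) & 0xFFFF);
}
```

`wParam`, for its part, carries key-state flags such as `MK_LBUTTON` (0x0001).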

So in the end I settled on one solution that I knew would work.

Instead of trying to fool programs into thinking I’m interacting with them, I let the OS do all the work.

I use a second monitor: the window currently under the cursor in VR is placed at its top-left corner, and the system cursor is positioned wherever the user is pointing in VR.

Calculating the exact cursor position is pretty straightforward. First, in VR space, I perform a raycast from the camera through the 3D cursor and check for intersections with the window geometries. Then I find the normalized position of the hit point within the curved quad and translate it into pixel coordinates of the real window, relative to its top-left corner.
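If the curved quad is a slice of a cylinder centred on the camera, the raycast has a closed form: a ray starting on the cylinder's axis hits it at t = radius / (horizontal length of the direction). The sketch below assumes that layout (camera at the origin looking down -Z, window width as arc length); `CurvedWindow`, `PixelPos` and `pickPixel` are hypothetical names, not the original implementation.

```cpp
#include <cassert>
#include <cmath>
#include <optional>

struct Vec3 { double x, y, z; };

// Hypothetical curved window: a vertical cylinder slice centred on the camera.
struct CurvedWindow {
    double radius;         // distance from the camera
    double width, height;  // arc length and height in world units
    double yaw;            // angle of the window centre (radians), 0 = -Z
    int widthPx, heightPx; // size of the real desktop window
};

struct PixelPos { int x, y; };

// Casts a ray from the camera along dir (towards the 3D cursor) and returns
// the pixel under it, relative to the real window's top-left corner, or
// nothing if the ray misses the window.
std::optional<PixelPos> pickPixel(const Vec3& dir, const CurvedWindow& w) {
    assert(w.radius > 0 && w.width > 0 && w.height > 0);
    const double pi = std::acos(-1.0);
    double horiz = std::sqrt(dir.x * dir.x + dir.z * dir.z);
    if (horiz == 0.0) return std::nullopt; // looking straight up or down
    double t = w.radius / horiz;           // ray origin lies on the axis
    Vec3 hit{dir.x * t, dir.y * t, dir.z * t};

    double angle = std::atan2(hit.x, -hit.z); // 0 straight ahead, + to the right
    double rel = std::remainder(angle - w.yaw, 2 * pi); // wrap to [-pi, pi]
    double arc = w.width / w.radius;
    double u = (rel + arc / 2) / arc;              // 0 at left edge, 1 at right
    double v = (w.height / 2 - hit.y) / w.height;  // 0 at top edge, 1 at bottom
    if (u < 0 || u >= 1 || v < 0 || v >= 1) return std::nullopt;
    return PixelPos{static_cast<int>(u * w.widthPx),
                    static_cast<int>(v * w.heightPx)};
}
```

Looking straight at the centre of a 1000 × 500 px window maps to pixel (500, 250); adding that window's position on the second monitor gives the coordinate to hand to the system cursor.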

When the VR cursor is not over any application, I hide the system cursor and keep resetting its position to the middle of the primary screen (so that it doesn’t interfere with any running applications), just like in a first-person shooter, and I track the deltas each frame to move the 3D cursor in VR.
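Since the VR cursor lives on a sphere of fixed radius, two angles fully describe it, and each frame's delta just nudges those angles. On Windows the recentring itself would presumably be a `SetCursorPos` call to the screen centre each frame (with the cursor hidden via `ShowCursor(FALSE)`); the sketch covers only the portable part. `SphereCursor`, its fields, the pitch clamp and the sensitivity value are assumptions for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

// The VR cursor sits on a sphere of fixed radius around the stationary camera.
struct SphereCursor {
    double yaw = 0.0;   // radians, positive turns right
    double pitch = 0.0; // radians, positive looks up
    double distance;    // sphere radius
    double radiansPerPixel;

    // Apply one frame's movement of the hidden system cursor, measured from
    // the screen centre. Screen y grows downwards, so positive dy lowers it.
    void applyDelta(int dx, int dy) {
        assert(radiansPerPixel > 0);
        const double limit = std::acos(-1.0) / 2 - 1e-3; // stay off the poles
        yaw += dx * radiansPerPixel;
        pitch = std::clamp(pitch - dy * radiansPerPixel, -limit, limit);
    }

    // World-space position; camera at the origin looking down -Z at yaw = pitch = 0.
    Vec3 position() const {
        return {distance * std::cos(pitch) * std::sin(yaw),
                distance * std::sin(pitch),
                -distance * std::cos(pitch) * std::cos(yaw)};
    }
};
```

Clamping the pitch just short of ±90° avoids the degenerate poles, where yaw stops having a visible effect.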

As soon as I detect the cursor entering a 3D window, I bring that window to the top using this series of calls:

You would think that a simple BringWindowToTop or SetActiveWindow would do the trick, but nooo, nothing is that simple in the Win32 API. And even the above code doesn’t always work reliably: sometimes windows get stuck in the foreground no matter what.

I experimented with minimizing non-active programs, moving them out of the way or forcing them to the bottom of the z-order, but every solution had side effects: programs would stop updating, or there would be severe performance issues.

Keyboard inputs

Any window under the cursor gets focus, so it captures all keyboard and mouse events as normal. The VR app only listens for a few global key presses to perform window manipulation, like zooming in or tilting windows, but it never receives focus.

But here’s the challenge:

Once wearing the VR headset, how to actually use the keyboard?

While touch typing could definitely come in handy when working in text editors, more often than not the user will need to perform one-off key presses, trigger shortcuts, type ad-hoc search queries and generally move their hands around a lot. Being able to see where the keys (and the keyboard, for that matter) are would help greatly, so that peeking from under the headset every minute doesn’t become second nature.

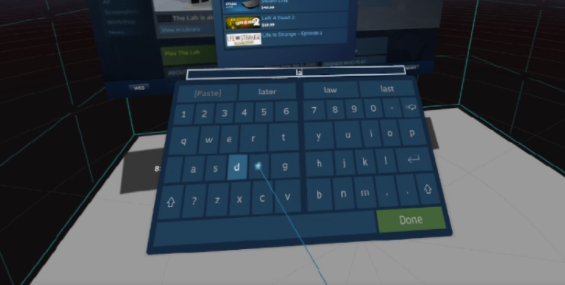

1. On-screen keyboard

A common solution in VR environments is to display a virtual keyboard panel that can be interacted with via the controllers, typically using a laser pointer. It gets the job done for sporadic text entry but isn’t practical for any kind of real-world typing.

2. Video feed of the keyboard

I tried hooking up a webcam (albeit a low-resolution one), making it film the keyboard from above and streaming it into VR as just another window, placed horizontally just in front of the camera, and with some success, I must say.

While you can’t really make out the letters on the keys, just seeing the hands against the layout of the keyboard is already a huge improvement, so much so that pressing any random key on demand becomes quite easy.

3. Kinect and 3D mapping

The next step would be to try pointing a 3D scanner, like the one in Kinect, at the keyboard and post-processing the resulting point cloud into a simplified mesh representing the hands and the keyboard itself. Such a scan won’t have high enough fidelity to capture all the keys, but if we created a 3D model of the exact keyboard ahead of time and aligned it properly in 3D space, we would only need to overlay the hands on top of it. (Here’s an example of Mr.doob experimenting with Kinect streaming.)

I admit I haven’t tried this approach (and back in 2015 off-the-shelf technology probably wasn’t sophisticated enough), but it could potentially produce a more natural feel than a straight-up flat video.

Also, what Microsoft has done with spatial mapping in HoloLens could be useful here as well.

4. AR

Actually, I feel the best solution would simply be an AR headset, through which we could naturally see both our hands and the keyboard, but also the entire surroundings, so that we can grab that mug of coffee without spilling it all over the place.

In fact, a VR headset with decent enough passthrough (which Oculus is starting to integrate into its headsets) would probably work too, though never as naturally as a true AR kit.

Limitations

Let’s face it: this whole project was one giant hack. But it works, and that’s where all the fun is, right?

After all, the goal was just to experiment with what could be possible inside a virtual desktop, and to explore the technical challenges along the way. It only works on Windows 7 and requires the specific setup described above, so I wouldn’t recommend anyone try this at home.

Since it relies on hijacking system libraries, this is of course not a viable solution for any practical application, but Windows 10 introduced new APIs that could make the whole process more legitimate.

Let’s also not ignore the security considerations: giving a third-party program full access to the contents of every application could get out of hand pretty easily, so close collaboration with OS makers would most likely be necessary to integrate such a solution at the system level, robustly and securely.

Final Thoughts

So now the final question becomes: is it all worth it?

The possibilities that VR or AR working environments could bring are without a doubt exciting. This article covers the most rudimentary one: bringing the current flat desktop interface into a 360° space just to increase the real estate available for your windows. To truly leverage the power of a 3D interface, we have to look at games and the kinds of interaction and manipulation they offer that a traditional 2D UI can’t.

There is of course the human aspect to consider also — we are accustomed to viewing and manipulating documents in two dimensions — it’s just simpler to keep track of things when they’re simply in front of us. Introducing another dimension, while seemingly closer to the real world experience we have evolved in, often imposes extra cognitive load to keep things organized and easily accessible. After all, we don’t fancy having to physically walk around the office just to reach some documents or devices — it’s much more convenient to have them right where you sit.

So any 3D interface must be as simple to use as current 2D ones and must add significant value to justify changing the way applications are designed and how they interact with one another, UX-wise.

While the example discussed here was using a VR headset, the ideal implementation would involve some kind of AR glasses.

Advantages are numerous:

- Comfort — no more strapping a bulky headset with the rubber band eating into the skin, messing up the hair and feeling sweat building up around your face

- Can view your desktop anywhere: given that AR glasses are portable, they can simply stream the image from a server running the actual software, which should reduce the computational requirements of the kit and increase battery life. A keyboard could simply be projected virtually onto any surface, with the headset tracking the fingers, a bit like IR keyboards worked back in the day

- You’re no longer isolated from the outside world: not only can you clearly see your keyboard and your desk with everything on it, but colleagues can also approach you for a quick chat without having to pull you out of virtual reality

- If the AR glasses simply become lightweight viewing terminals, they eliminate the need for monitors and even workstations. And while it’s definitely useful to have a physical monitor to discuss things with several people, with clever UX multiple users could share content directly in their AR headsets, potentially even anchoring it in real-world space so that they can all point at things using gestures that everyone can see, and even manipulate things collaboratively.

For an idea of how this could look, check out Iskander Utebayev’s concept video:

As it stands right now, the key limiting factor for any such interface is the resolution of the headsets. Even a 4K display per eye most likely won’t be enough to truly replicate the current desktop experience. Due to lens distortion, clearly representing a single native pixel takes at least 3×3 or 4×4 VR pixels if you want to read text at a distance without zooming in all the way.
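As a rough worked example, assume a 4K UHD panel (3840 × 2160) per eye and, generously, that the whole panel is devoted to a single virtual screen. Dividing by the 3×3 and 4×4 pixel footprints above gives the equivalent native desktop resolution:

```latex
\frac{3840}{3} \times \frac{2160}{3} = 1280 \times 720,
\qquad
\frac{3840}{4} \times \frac{2160}{4} = 960 \times 540
```

So even under that best case, the legible area amounts to roughly a 720p-class or 540p-class desktop, less than a single common 1920 × 1080 monitor, and a virtual window that covers only part of the field of view gets proportionally less.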

The field of view of devices like HoloLens or Magic Leap is also a factor that severely limits the practicality of such a setup: standard monitors, and especially ultrawide ones, will provide a much better experience for any multi-window working environment. But this doesn’t mean that HoloLens isn’t ideal for other, more specific use cases!

So, to close off this rather lengthy entry: I will keep a close eye on the next generations of hardware, be it from Oculus, Microsoft, Apple or others, and continue experimenting with the next step in the evolution of the desktop user interface.

Thanks for reading and I hope you’re as excited as I am about what the future of VR holds, not only for home entertainment but at the workplace too!

If you would like to stay in touch, you can reach me at rein4ce@gmail.com or LinkedIn.